IT organizations are continuously looking for new ways to drive operational and cost efficiencies and the data center is no exception. Data center staff want to take advantage of the benefits of a subscription-based cloud consumption model, but often don’t have the ability or budget to move their data or compute resources to the cloud. As a result, IT leaders are looking for ways to leverage resource consumption in a pay-as-you-go cloud model but within an on-premises infrastructure.

ESG research backs this up, with 48% of IT leaders saying they would prefer to pay for on-premises data center infrastructure through a consumption-based model. [1]

48% of organizations say they would prefer to pay for infrastructure via a consumption-based model such as a variable monthly subscription based on hardware utilization, which is up from 42% last year.

Source: Enterprise Strategy Group

[1] Source: ESG Research Report, 2021 Technology Spending Intentions Survey, January 2021.

IBM has been paying attention to these market trends, and in the third quarter of 2021 it expanded its storage consumption options with new IBM storage-as-a-service offerings.

IBM offering storage as a consumption model

In a recent briefing covering IBM’s Storage launch, Eric Herzog stated, “IBM storage-as-a-service offers a lower price than the public cloud.” I prefer this pay-as-you-go consumption model to a forced, all-at-once move to the cloud. With IBM storage-as-a-service, organizations can move to the cloud yet keep some storage on-premises under the same subscription financial model. The beauty of this approach is that it offers a hybrid cloud solution with a concierge service that continually health-checks the client’s systems.

It is an interesting approach, and it shows that IBM is keeping up with and responding to storage industry trends in how customers want to purchase IT resources.

Not surprisingly, IBM is also responding to the future promise of quantum computing.

Quantum computing is not a myth!

In a different briefing this week, IBM’s Chief Quantum Exponent Robert Sutor explained that “…quantum computers will solve some problems that are completely impractical for classical computers…” The conversation with Robert was impressive. He walked me through IBM’s history in the quantum computing space and said, “There are differences when describing quantum computing.” Sutor offers a lot of depth on this topic in his book, Dancing with Qubits: How quantum computing works and how it can change the world.

The IBM Institute for Business Value (IBV) has been deeply engaged with quantum research for many years. In June of this year, IBM announced advancements with its Quantum System One, delivering its first quantum computer system outside of the US to Europe’s largest application-oriented research organization, Fraunhofer-Gesellschaft. A second system is due to be delivered to the APAC region soon. IBM, as usual, is at the forefront of innovation and driving paradigm shifts.

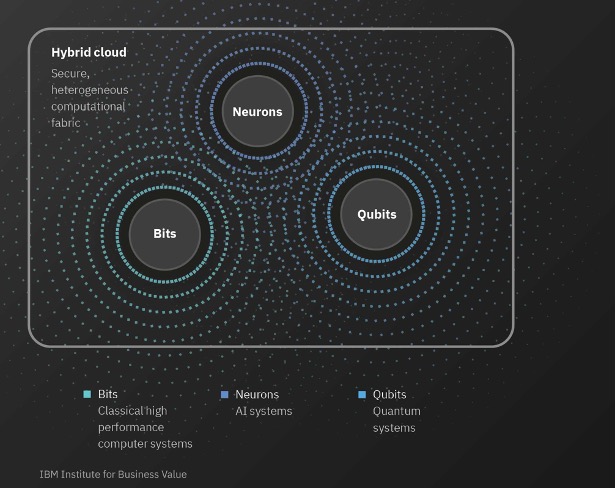

Many people, even tech-savvy ones, do not fully understand the potential of quantum computing’s processing power. This diagram illustrates the “gap” between today’s classical computing and how quantum computing will enhance hybrid cloud and the overall heterogeneous computational fabric. Cloud-based, open-source development environments will make using quantum computers “frictionless,” according to IBM.

The number of qubits (or quantum bits) in a computer makes a big difference in computational performance, according to Robert. By 2023, IBM will expand its current quantum computer from 65 qubits to over 1,000 qubits. It is an ambitious goal and an exciting one at the same time. It is not quite like leaping through space and time as Sam Beckett did in the TV show Quantum Leap, but IBM’s future focus in this area is impressive nonetheless.

The next phase in quantum computing? Beyond continuing to evolve the technology, IBM should evangelize its benefits more broadly so that organizations are well positioned to take advantage of all it has to offer. Hopefully, this time next year, I’ll speak with IBM again and see the beginning of more widespread adoption of quantum computing.

IBM seems to be doing the right things

I like the direction that IBM is taking with the storage roadmap. The performance improvements to the IBM DS8900F analytics machine and the IBM TS7770 all-flash VTL improve AI responsiveness and resiliency, which seems to provide an advantage over other systems in the same class. We were also briefed on the importance of IBM’s strategy with FlashSystem Safeguard Copy and how it improves data resilience by providing immutable point-in-time copies of production data, logically air-gapped offline data, and separate privileges for admins.

Paul’s POV

In my opinion, IBM is listening to customers. Its roadmap response in both storage and quantum is aggressive and should keep the company competitive. Customers will ultimately decide for themselves whether these are the right approaches, but from my vantage point, IBM is checking the right boxes to address many common business pain points, both those felt right now and those expected in the future. It will be interesting to see how the competitive landscape responds to these new advances in IBM’s portfolio.